The $50B Question Nobody’s Asking About AI’s Future

Let me tell you something wild I realized while scrolling through this week’s tech headlines. We’re witnessing the biggest infrastructure land grab since the railroad boom of the 1800s—except this time, the tracks are made of fiber optic cables, and the locomotives are GPU clusters that consume enough electricity to power small cities.

The numbers are honestly staggering. Microsoft just dropped $10 billion on an AI data hub in Sines, Portugal. Google followed with a €5 billion German expansion. D-Matrix, a startup most people haven’t heard of, just raised $275 million at a $2 billion valuation for AI chips that could make Nvidia nervous. And IBM? They’re building quantum computers that might make today’s data centers look like pocket calculators.

But here’s what keeps me up at night: We’re so focused on the AI models themselves—the ChatGPTs, the Claudes, the promises of artificial general intelligence—that we’re missing the real story. The infrastructure being built right now will determine who controls the digital economy for the next 50 years. And the race is way tighter than you think.

When Your Data Center Eats Solar Farms for Breakfast

Let’s talk about Google’s recent move in Ohio. They signed a 15-year deal with TotalEnergies for 1.5 TWh of solar power—enough to keep the lights on in about 140,000 homes annually. Sounds green and responsible, right? Well, yes, but it’s also a survival strategy.
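A quick back-of-the-envelope check on that homes figure. This is a rough sketch, not anything from the deal terms: the 10,700 kWh per household per year is my assumed ballpark for an average US home, and the function name is made up for illustration. It runs the numbers two ways, since 1.5 TWh could be read as a yearly figure or as a total across the 15-year contract.

```python
# Assumption (not from the deal): an average US home uses about 10,700 kWh per year.
ASSUMED_KWH_PER_HOME_PER_YEAR = 10_700
KWH_PER_TWH = 1_000_000_000  # 1 TWh = 1 billion kWh


def homes_powered(twh_per_year, kwh_per_home=ASSUMED_KWH_PER_HOME_PER_YEAR):
    """Number of homes a given yearly energy supply could cover."""
    return twh_per_year * KWH_PER_TWH / kwh_per_home


# Reading 1: the 1.5 TWh is delivered every year.
homes_if_annual = homes_powered(1.5)

# Reading 2: the 1.5 TWh is the total over the 15-year term.
homes_if_total = homes_powered(1.5 / 15)

print(f"If 1.5 TWh is per year:   {homes_if_annual:,.0f} homes")
print(f"If 1.5 TWh is over 15 yr: {homes_if_total:,.0f} homes")
```

Under that household assumption, only the per-year reading lands near 140,000 homes. Spread across the full contract, the same energy covers far fewer.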

AI workloads are power-hungry beasts. A single training run for a large language model can emit as much CO₂ as five cars do in their entire lifetimes. And as these models get bigger (and they will), that number is only climbing. The Pew Research Center just published data showing US data centers now add 900,000 tons of CO₂ annually to our atmosphere. That’s roughly what 195,000 gasoline-powered cars emit in a year.
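If you want to sanity-check the car comparison, you can back out the per-car figure it implies. A minimal sketch using only the two numbers quoted above; the function name is just for illustration.

```python
def implied_tons_per_car(total_tons_co2, equivalent_cars):
    """Yearly CO2 per car implied by an 'equivalent cars' comparison."""
    return total_tons_co2 / equivalent_cars


# Figures quoted in the paragraph above.
tons_per_car = implied_tons_per_car(900_000, 195_000)
print(f"Implied emissions: {tons_per_car:.2f} tons of CO2 per car per year")
```

That works out to a bit over 4.6 tons per car per year, which is in the range usually cited for a typical gasoline passenger car, so the two figures are at least consistent with each other.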

So when Google inks a solar deal, they’re not just virtue signaling. They’re locking down power supplies before the competition does. It’s the same reason Microsoft is cozying up to Portugal—the country has abundant renewable energy and strategic positioning for serving both European and African markets.

The fascinating part? This is creating a weird new geopolitical dynamic. Countries with abundant clean energy are suddenly tech superpowers. Portugal, with its Atlantic winds and sunshine, is becoming as strategically important as Taiwan is for chip manufacturing. Who would’ve thought?

The Chip War Nobody Saw Coming

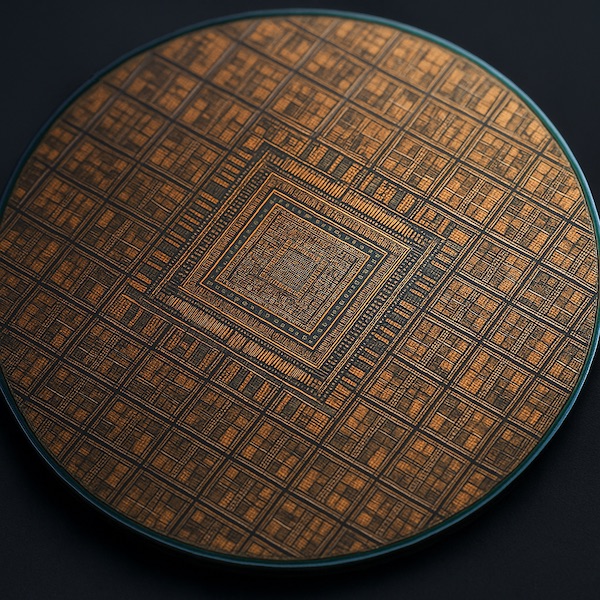

Speaking of chips, let’s talk about D-Matrix’s massive funding round: $275 million from heavy hitters like Microsoft, Qatar Investment Authority, and Singapore’s EDBI. The company’s building inference chips specifically for transformer workloads. Transformers are the neural network architecture behind nearly every modern large language model.

Here’s why this matters: Right now, Nvidia dominates AI compute. Their H100 GPUs are the gold standard, with waiting lists that stretch for months and prices that make CFOs weep. But D-Matrix is betting that specialized chips can run AI inference (the “thinking” part, not the training) more efficiently—using less power, generating less heat, and crucially, costing less.

Think of it like this: Nvidia built a Swiss Army knife that happens to be amazing at AI. D-Matrix is building a dedicated sushi knife. For the specific task of running AI models, that specialization could be revolutionary.

I spoke with a startup CTO last week who told me something chilling: “We’re budgeting more for compute than for salaries next year. If someone can cut that bill by even 20%, we’ll migrate yesterday.” That’s the kind of pressure creating opportunities for D-Matrix and others.

But the real story isn’t just about cost—it’s about control. When Microsoft invests in D-Matrix while also building its own data centers, they’re creating a vertically integrated stack that reduces dependence on Nvidia. Every major tech company is quietly developing this strategy. The GPU shortage taught them a hard lesson: never rely on a single supplier for your most critical resource.

Quantum Computing: The Plot Twist Nobody Expected

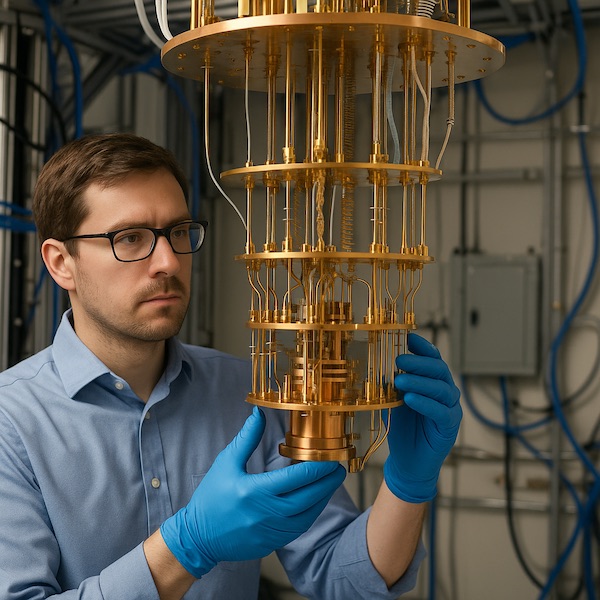

While everyone obsesses over AI, IBM just unveiled two quantum processors, Loon and Nighthawk, that might change everything we think we know about computing. I know, I know, quantum computing has been “10 years away” for 30 years. But stick with me here.

The Loon processor has a unique architecture: each qubit connects to six others, and those connections can move vertically as well as horizontally across the chip. This “breaking the plane” connectivity might solve one of quantum computing’s biggest hurdles—error correction.

IBM has been taking a different path than competitors like Google. Instead of building massive numbers of qubits, they’re focusing on making each qubit more reliable and connected. The Loon chip uses an error-correction method that requires fewer physical qubits per logical qubit than competing approaches.

What does this mean in plain English? They might achieve practical quantum computing with thousands of qubits while others need millions. That’s the difference between building a usable computer and building a warehouse-sized science project.

The Nighthawk processor, meanwhile, can run quantum programs 30% more complex than IBM’s current best. For the first time, we’re seeing quantum computers that might actually solve real problems—like simulating molecular interactions for drug discovery or optimizing complex logistics networks—faster than classical supercomputers.

I talked to a pharmaceutical researcher who told me: “If quantum computers can accurately model protein folding in minutes instead of months, it could shave years off drug development timelines.” That’s not just incremental improvement—that’s revolutionary.

The Invisible Talent Exodus

While infrastructure gets the headlines, there’s a quieter revolution happening in research labs. Yann LeCun, Meta’s chief AI scientist and one of deep learning’s godfathers, is reportedly leaving to start his own company. This isn’t just another executive shuffle—it’s part of a pattern.

Top AI researchers are discovering they don’t need Big Tech’s deep pockets anymore. Cloud compute is accessible. Funding is flowing. And most importantly, they want freedom from corporate pressures to productize everything immediately.

This talent migration is creating a Cambrian explosion of AI startups. Some will fail spectacularly. Others will become tomorrow’s giants. But the dispersion of knowledge itself is valuable—it’s taking AI out of the walled gardens of Google, Microsoft, and Meta and spreading it across the ecosystem.

The irony? The infrastructure investments by these same giants are enabling the exodus. They’re building the roads that competitors will use to challenge them. It’s like Ford paving highways that Toyota uses to sell more cars.

What It All Means for Regular People

Okay, so tech giants are building massive infrastructure. Quantum computers are almost practical. AI chips are proliferating. But what does this mean for someone running a small business or just trying to understand where the world is headed?

First, the cost of AI is about to plummet. When D-Matrix and competitors force Nvidia to compete, when quantum computers handle optimization problems, when data centers become more efficient—these savings will trickle down. The $20/month you’re paying for AI tools today might be $2 in three years.
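How steep is a drop from $20 to $2 in three years? Here’s a rough sketch that converts it into a yearly rate, assuming prices fall by the same percentage each year. That’s a simplification I’m making for illustration, not a forecast.

```python
def annual_decline(start_price, end_price, years):
    """Constant yearly percentage drop (as a fraction) that takes
    start_price down to end_price over the given number of years."""
    return 1 - (end_price / start_price) ** (1 / years)


rate = annual_decline(20, 2, 3)
print(f"Required price drop: about {rate:.0%} per year")

# Walk the price down year by year to confirm it ends at $2.
price = 20.0
for year in range(1, 4):
    price *= 1 - rate
    print(f"Year {year}: ${price:.2f}")
```

In other words, prices would need to fall by more than half every year, three years running. Not impossible in this industry, but it’s a useful number to keep in mind.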

Second, we’re going to see AI capabilities integrated into everything, everywhere. The infrastructure being built right now is general-purpose. It’s not just for chatbots—it’s for scientific simulation, drug discovery, climate modeling, personalized education, and problems we haven’t even imagined yet.

Third, and most importantly, the decentralization of AI infrastructure is creating opportunities for smaller players. You don’t need Google’s budget to access world-class AI anymore. You just need a credit card and an internet connection.

But there are risks. That 900,000 tons of CO₂ figure? It’s growing fast. The geopolitical tensions over chip manufacturing and energy resources? They’re intensifying. And the concentration of infrastructure in a few hyperscalers’ hands raises legitimate questions about digital sovereignty.

The Real Bottom Line

We’re at an inflection point. The AI infrastructure being built today will be the foundation of the next digital economy—just like fiber optic cables in the 90s or data centers in the 2000s. The winners won’t necessarily be the companies with the best models, but those that control the most efficient, sustainable, and accessible infrastructure.

For entrepreneurs and developers, this is a golden age of access. For investors, it’s a minefield of overvaluation and genuine opportunity. For the rest of us, it’s a reminder that the most important technological revolutions happen invisibly—in server rooms, chip fabs, and power purchase agreements we never see.

The race is on. And honestly? I don’t think we’ve fully grasped how much the world is about to change.